Imagine walking into a room with two of the brightest minds in AI: Claude and ChatGPT. Both are ready to answer your questions, help with tasks, and make your life easier. But here’s the catch: they’re not the same. In this post, “Claude vs. ChatGPT: What’s the Difference in 2024?”, we’ll explore the unique strengths and features that set these two AI giants apart.

Are you curious to know how Claude, the creation of Anthropic, stacks up against OpenAI’s ChatGPT? How do their answers differ? Which one understands your needs better? Whether you’re a tech enthusiast, a business professional, or just someone who loves exploring new technology, understanding the differences between Claude and ChatGPT could be a game-changer for you.

In this blog post, we’ll dive into their capabilities, quirks, and what makes each one special. Get ready to uncover the fascinating world of AI through a comparison that’s sure to surprise you! So, buckle up, and let’s embark on this exciting journey of discovery together.


Claude vs. ChatGPT: What’s the Difference in 2024?

Claude and ChatGPT are driven by similarly advanced language models. However, they have some notable differences. ChatGPT stands out for its versatility, offering features like image generation and internet access. On the other hand, Claude provides more affordable API access and can handle larger amounts of data at once due to its bigger context window.

Here’s a brief overview of what sets these two AI models apart.

| Feature | Claude | ChatGPT |
|---|---|---|
| Company | Anthropic | OpenAI |
| AI Models | Claude 3.5 Sonnet, Claude 3 Opus, Claude 3 Haiku | GPT-4o, GPT-4, GPT-3.5 Turbo |
| Context Window | 200,000 tokens (up to 1,000,000 tokens for certain use cases) | 128,000 tokens (GPT-4o) |
| Internet Access | No | Yes |
| Image Generation | No | Yes (DALL·E) |
| Supported Languages | English, Japanese, Spanish, French, and many others | 95+ languages |
| Paid Tier | $20/month for Claude Pro | $20/month for ChatGPT Plus |
| Team Plans | $30/user/month; includes Projects feature for collaboration | $30/user/month; includes workspace management features and shared custom GPTs |
| API Pricing, Input (per 1M tokens) | Claude 3 Opus: $15; Claude 3.5 Sonnet: $3; Claude 3 Haiku: $0.25 | GPT-4o: $5; GPT-3.5 Turbo: $0.50; GPT-4: $30 |
| API Pricing, Output (per 1M tokens) | Claude 3 Opus: $75; Claude 3.5 Sonnet: $15; Claude 3 Haiku: $1.25 | GPT-4o: $15; GPT-3.5 Turbo: $1.50; GPT-4: $60 |
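
To make the API pricing rows concrete, here is a minimal Python sketch that turns per-1M-token prices into a per-request cost. The price dictionary simply copies the 2024 figures from the table (prices change often), and `request_cost` plus the 10,000-token-in / 1,000-token-out workload are hypothetical illustrations, not part of either vendor’s SDK.

```python
# Per-1M-token API prices in USD (input, output), copied from the 2024 table above.
# Treat these as a snapshot; check each vendor's pricing page for current rates.
PRICES_PER_MILLION = {
    "Claude 3 Opus": (15.00, 75.00),
    "Claude 3.5 Sonnet": (3.00, 15.00),
    "Claude 3 Haiku": (0.25, 1.25),
    "GPT-4o": (5.00, 15.00),
    "GPT-4": (30.00, 60.00),
    "GPT-3.5 Turbo": (0.50, 1.50),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: USD cost of one request, billing input and output tokens separately."""
    input_price, output_price = PRICES_PER_MILLION[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: a 10,000-token prompt that produces a 1,000-token reply.
    for model in PRICES_PER_MILLION:
        print(f"{model:<18} ${request_cost(model, 10_000, 1_000):.4f}")
```

With these figures, that request costs $0.045 on Claude 3.5 Sonnet versus $0.065 on GPT-4o. Because output tokens are priced several times higher than input tokens for every model listed, long replies dominate the bill, so it is worth measuring both sides of your own workload.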

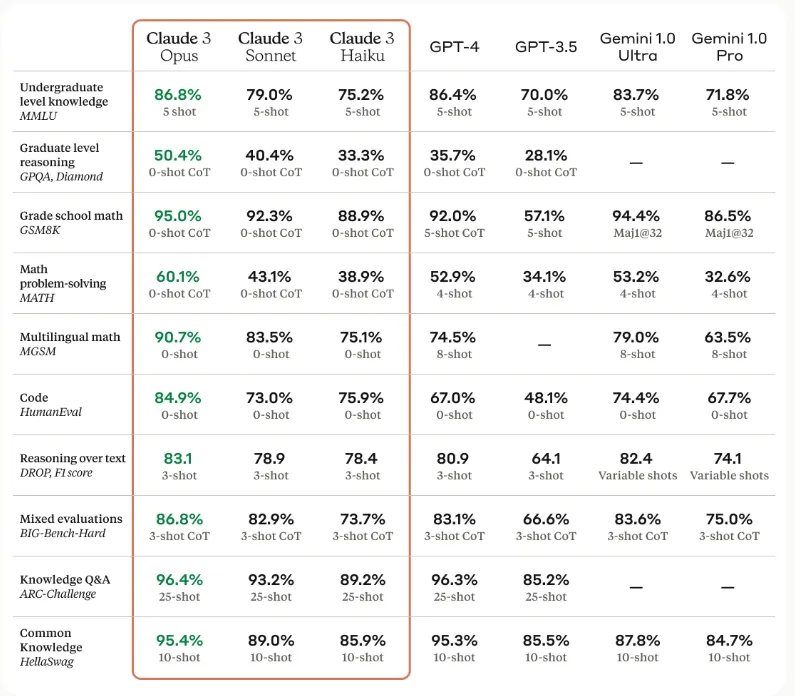

To evaluate the performance of different language models, AI companies use benchmarks that work much like standardized tests. OpenAI’s assessments of GPT-4o show strong results on tests like MMLU, which evaluates undergraduate-level knowledge, and HumanEval, which assesses coding skills. Meanwhile, Anthropic has released a direct comparison of Claude, GPT-4o, Llama, and Gemini, showing that its Claude 3.5 Sonnet model outperforms GPT-4o in most evaluations.

While these benchmarks are undoubtedly useful, some machine learning experts believe that this type of testing might overstate the progress of language models. New models might unintentionally be trained on the very evaluation data used to test them, which inflates their scores on standardized tests even though they may still struggle with new variations of those same questions.

To understand how each model performs in everyday tasks, I conducted my own comparisons. Here’s a high-level overview of my findings.

| Task | Winner | Observations |
|---|---|---|
| Creativity | Claude | Claude’s default writing style is more human-sounding and less generic. |
| Proofreading and Fact-checking | Claude | Both do a good job spotting errors, but Claude is a better editing partner because it presents mistakes and corrections more clearly. |
| Image Processing | Tie | Neither Claude nor ChatGPT is 100% accurate at identifying objects in images, and both have issues with counting. Both provide remarkable insights into uploaded images. |
| Logic and Reasoning | ChatGPT | Both perform capably in tasks like math, physics, and riddles. However, GPT-4o is a more trustworthy partner for complex equations. |
| Emotion and Ethics | Tie | Earlier iterations of Claude felt more “human” and empathetic, but Claude 3.5 and GPT-4o take an equally robotic approach. |
| Analysis and Summaries | ChatGPT | Despite Claude 3.5’s larger context window, GPT-4o processed much larger documents in tests and provided more accurate analysis. |
| Coding | Claude | Claude 3.5 is a more capable coding assistant, with its Artifacts feature providing an interactive interface to see the results of your code immediately. |
| Integrations | ChatGPT | ChatGPT’s native DALL·E image generation, internet access, and third-party GPT integrations offer capabilities beyond Claude’s standard offering. |

Continue reading to see how Claude and ChatGPT performed in several of these tasks.

Claude excels as a partner for creativity

When ChatGPT was first released, I, like many others, began by generating amusing Shakespearean sonnets. One example was about avoiding the gym: “How oft I vow to break this slothful spell, To don the garb of toil and sweat; But when the morrow comes, alas, I dwell, In lethargy, and naught but regret.”

Despite ChatGPT’s strengths as a creative assistant, its output can sometimes seem generic and overly flowery. It leans too heavily on certain words, producing phrases like “Let’s delve into the ever-changing tapestry of,” which have become telltale signs of AI-generated content. Although clever prompting can mitigate this issue, Claude generally produces more human-like responses right from the start.

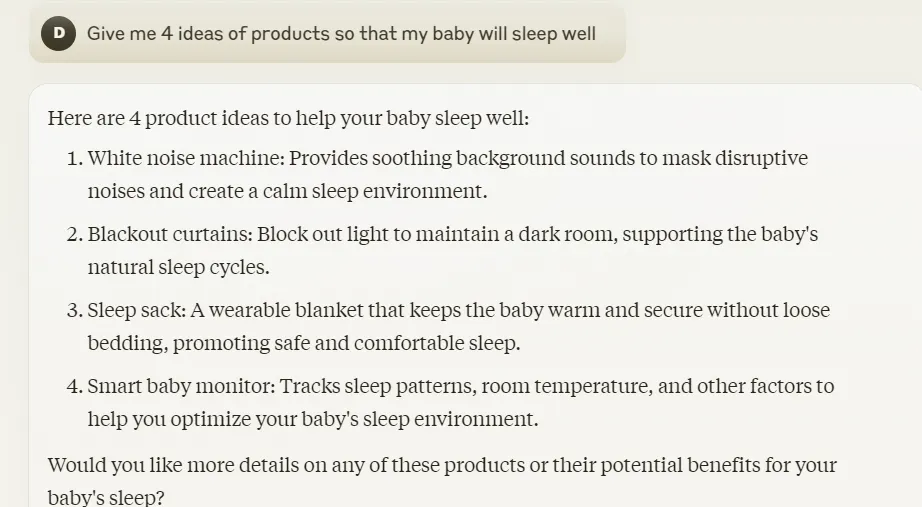

Assessment #1: Idea Generation

I gave both ChatGPT and Claude the same prompt: “Give me 4 ideas of products so that my baby will sleep well.” Here are the results.

Claude

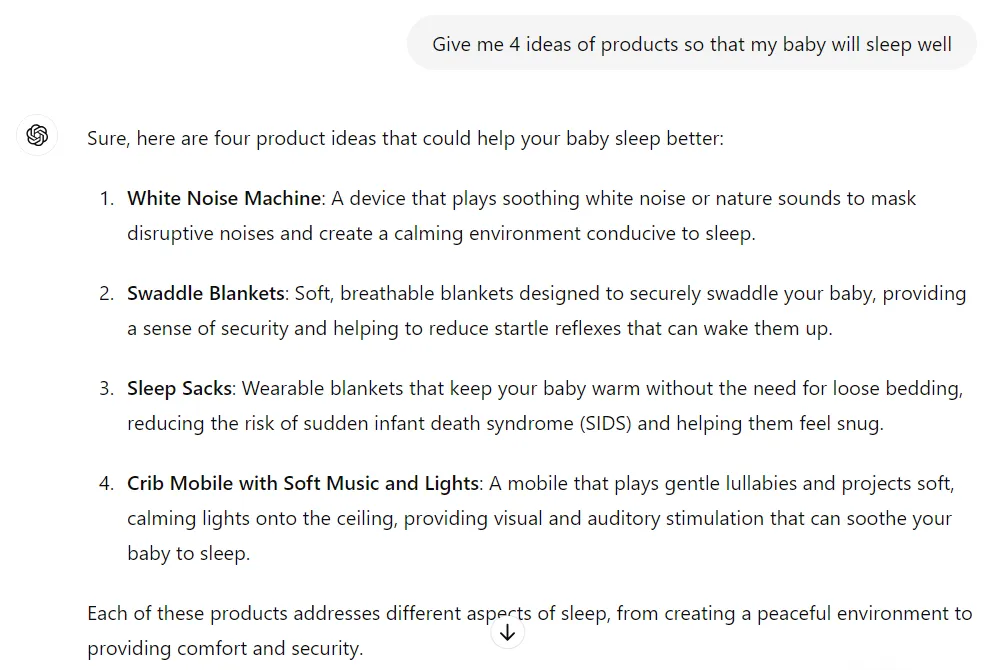

Here is ChatGPT’s response to the same prompt:

The results are very similar, but Claude’s suggestions lean more technical, while ChatGPT’s focus on everyday products.

Winner: Tie

Assessment #2: Creativity

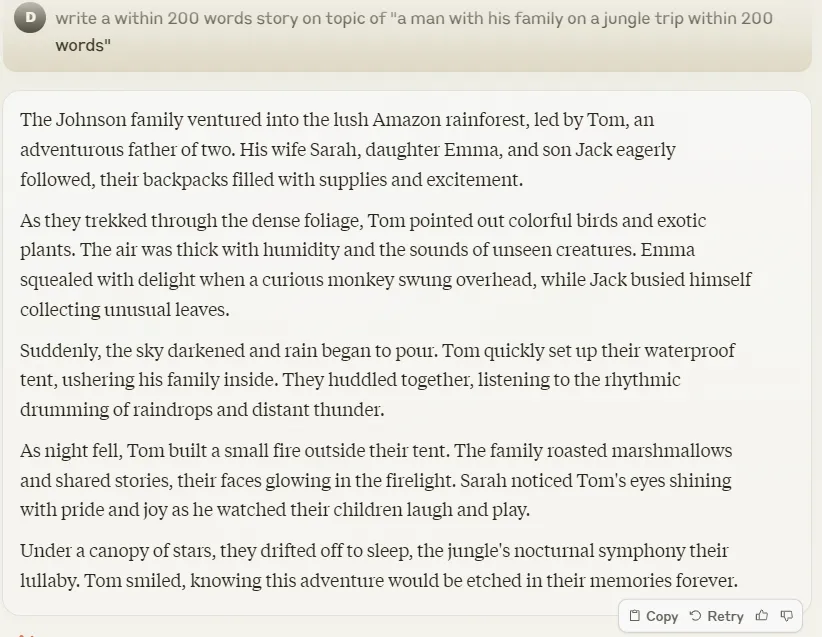

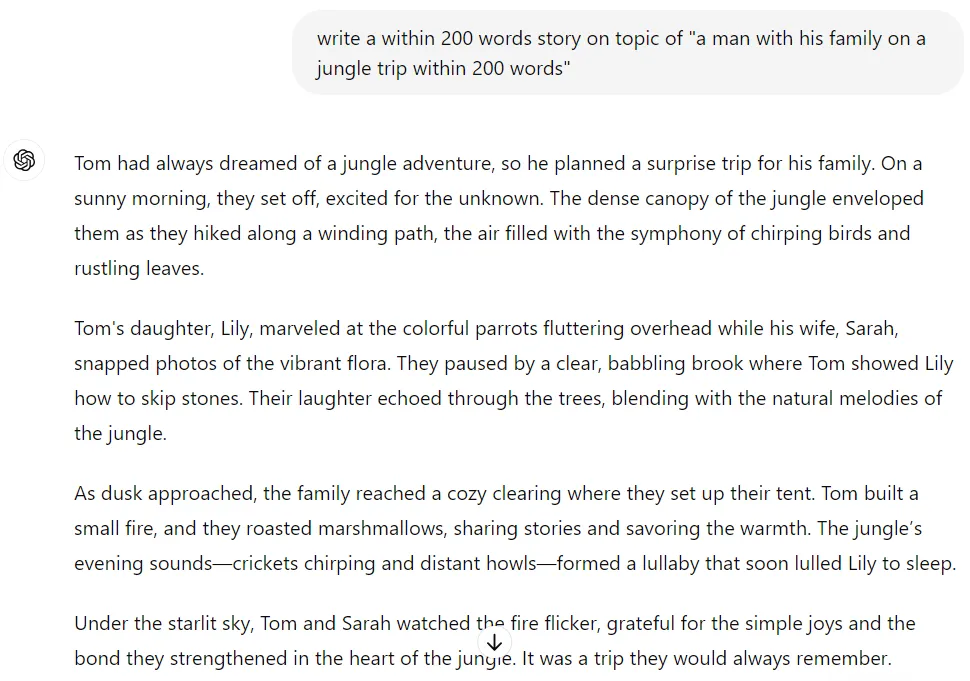

Next, I asked both chatbots to write a story on the topic “a man with his family on a jungle trip within 200 words.” Here are both results.

Claude

Claude wrote the whole story with a good amount of creativity.

ChatGPT

Both stories are of similar quality, but Claude’s goes deeper into the narrative and avoids formulaic phrasing.

Claude stands out as an exceptional editing assistant

The potential for AI in proofreading and fact-checking is substantial, as it could save human editors countless hours of detailed review. However, its effectiveness has been hindered by inaccuracy: language models sometimes fabricate information rather than admit they don’t know. With this in mind, I compared Claude and ChatGPT and found Claude to be the more dependable and trustworthy editing companion.

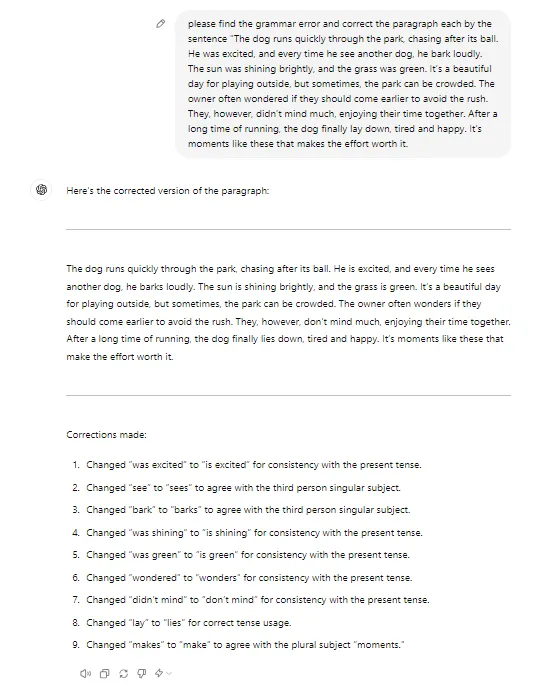

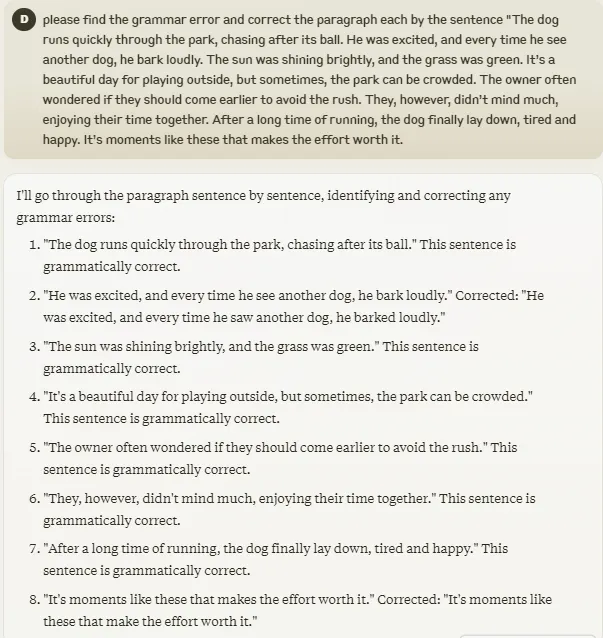

Assessment #3: Proofreading

I gave both chatbots a passage containing grammatical errors to see how each would handle it:

The dog runs quickly through the park, chasing after its ball. He was excited, and every time he see another dog, he barked loudly. The sun was shining brightly, and the grass was green. It’s a beautiful day for playing outside, but sometimes, the park can be crowded. The owner often wondered if they should come earlier to avoid the rush. They, however, didn’t mind much, enjoying their time together. After a long time of running, the dog finally lay down, tired and happy. It’s moments like these that makes the effort worth it.

Here are the results:

ChatGPT

Claude

Both did well, but Claude’s response was more detailed.

Assessment #4: Coding

I asked both chatbots to code a simple game.

As someone new to coding, I decided to dive into video game development, following a common beginner’s route. Claude’s guidelines for its Artifacts feature suggest creating individual characters and then assembling them into an interactive video game. Although I had some trouble getting this method to work perfectly, with a few prompts I managed to recreate a version of the classic Frogger game and even play it directly within Claude’s interface. Claude was also remarkably fast.

Claude vs. ChatGPT: Which AI Chatbot is Superior?

Claude and ChatGPT share many similarities, being advanced AI chatbots capable of handling tasks such as text analysis, brainstorming, and complex data processing. Observing either system tackle a challenging physics problem is quite impressive. However, depending on your specific needs, one might be more advantageous than the other.

For those seeking an AI companion for creative endeavors—such as writing, editing, brainstorming, or proofreading—Claude may be the preferable choice. Its outputs often come across as more natural and less formulaic compared to ChatGPT’s. Additionally, Claude 3.5 offers enhanced coding capabilities and more cost-effective API options.

On the other hand, if you need a versatile tool that can handle a wide range of tasks, ChatGPT stands out. It not only excels in text generation but also supports image creation, web browsing, and integration with custom GPTs designed for specialized fields like academic research. The latest update, GPT-4o, introduces even greater power and speed, enhancing its overall performance.